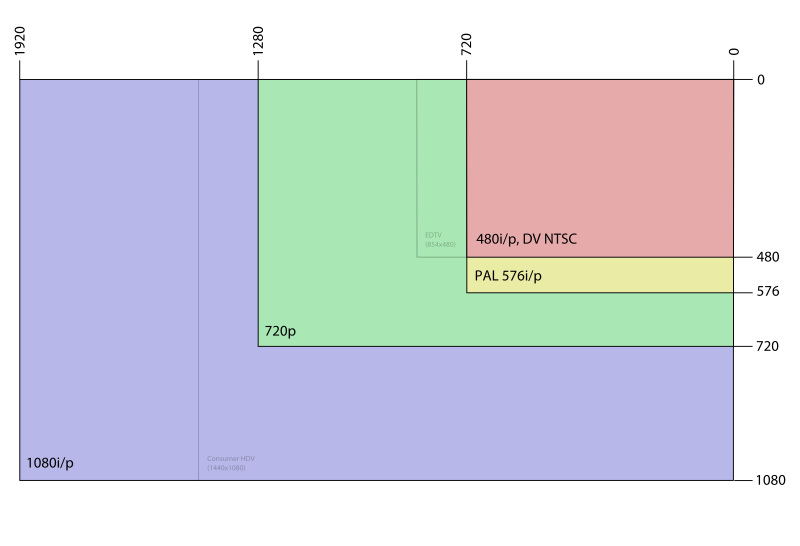

What is the difference between a game running at 1080p @ 30fps and a game running at 720p @ 60fps? And is it possible to get games running at 1080p @ 60fps? And while I'm asking questions, what is the difference between 1080p and 1080i?

Thanks

(I expect this thread to turn into a 3-page debate between Redlambs and Weaste about things the average Caf user knows nothing about)