Artificial Intelligence

- Thread starter Pogue Mahone

- Start date


Red Defence

Full Member

Big learning curve for Microsoft as well as for Tay. Long way to go.

Ubik

Nothing happens until something moves!

- Joined

- Jul 8, 2010

- Messages

- 19,364

So Skynet starts...on Twitter?

Actually makes a lot of sense.


Silva

Full Member

Holy shit that's both terrifying and amazing.

@dustfingers

Just came back home from a lecture given by Michael Jordan. That man is a fecking machine. I had the impression that pretty much no one in the room (a few dozen people with PhDs, many of them university professors) understood most of what he was doing, and the ease with which he related ideas from statistics, functional analysis and optimization to each other, and also to other disciplines like quantum mechanics, was fascinating. In addition, he seems to speak several languages and is a great communicator.

The biggest show of intelligence I have ever seen in my life.


A decent basketball player as well.

Yeah, after the lecture he started repeatedly dunking a tennis ball into a bottle.

adexkola

Doesn't understand sportswashing.

- Joined

- Mar 17, 2008

- Messages

- 48,845

- Supports

- orderly disembarking on planes


Where were you?? Lucky bastard.

Padua (Italy). I don't study there, but at a conference last week I met a professor from the University of Padua and he invited me.

I must say that I didn't understand most of the stuff in the lecture, but it was still quite fascinating. It also seems to me that he has gone from a neural network skeptic to a hater, and is now doing more theoretical stuff. In other words, he has gone full Vapnik.

Another interesting thing is that he opened the lecture in perfect Italian.


TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669

This is a field I'm really interested in. It's actually based on a technique called Machine Learning, a term that has been around since 1959.

Probably the most visual demonstration of Machine Learning out there: the bipeds teach themselves how to walk over time by making mistakes:

They're now using what are essentially supercomputers to run something called "Neural Networks". There's some really fascinating stuff out there on the subject, but computers are still learning to speak and do basic interactions, so it's a while off yet.

This lecture is quite interesting too. The field is definitely making progress:



sun_tzu

The Art of Bore

adexkola

Doesn't understand sportswashing.

- Joined

- Mar 17, 2008

- Messages

- 48,845

- Supports

- orderly disembarking on planes


Why is he a hater? Does he think neural networks are being overused?

Zarlak

my face causes global warming

I read today that a Russian AI robot escaped from its facility for the second time. It was programmed to learn about its surroundings, and they're thinking of switching it off because it keeps wandering off on its own.

sun_tzu

The Art of Bore

I read that they basically pushed it out the doors for some publicity, as they are due to release a new robot soon...

He didn't explain much, but it seemed to be for these reasons:

1) ANNs are getting over-hyped (mostly by the media), along with the brain parallels, which are nonsense (although to be fair, none of the main ANN researchers make those parallels; the media do).

2) ANNs are in reality very simple. It is just applying gradient descent to a large hypothesis space on large data. It isn't the most scientific method, and it is almost completely empirical (at the moment, Jordan is doing research on making the big data field a bit more scientific, not just the usual trial and error).

3) He seems to be unconvinced about their power. For example, he said that he doesn't think they will solve the problem of natural language processing (even though every company uses ANNs for that), though he said that they might solve the problem of vision (again, deep learning algorithms lead every benchmark in vision). He didn't mention other fields like autonomous driving (again, deep learning is the leader) or AlphaGo.
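For anyone wondering what "just applying gradient descent" looks like in practice, here is a minimal sketch. It assumes a toy two-parameter linear model and made-up data; the function name, learning rate and step count are illustrative choices, not anything from the lecture. A real ANN does the same thing with vastly more parameters.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by minimising mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step downhill, against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

The loop never "knows" the true relationship; it only follows the slope of the error, which is the empirical flavour point 2 describes.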

He seems to have little appreciation for anything that isn't heavy on maths and so doesn't have a very good theoretical formulation. Which might be why he left the field of neural networks at the beginning of the nineties. Or he might just be in that mood for now. I read an interview with LeCun some time ago in which he said that Jordan reinvents himself every 5 years or so, completely changing the field he's working in. And that is so, if you look at his career. He has a master's in physics and a PhD in cognitive science, then started working on machine learning, made some contributions to neural networks, moved on to statistical models (latent Dirichlet allocation), then to clustering (spectral clustering), and is now doing this research on theoretical data science and accelerated methods (optimization).

A master of all trades.

Welsh Wonder

A dribbling mess on the sauce

The end is nigh.

EDIT: Reading again it sounds like it's basically mimicking me on a night out.

adexkola

Doesn't understand sportswashing.

- Joined

- Mar 17, 2008

- Messages

- 48,845

- Supports

- orderly disembarking on planes


Everything from regression to ANN to SVMs is over-hyped. Failure is not an option. We're gonna find a pattern or die trying, is the mindset promoted by the media/industry.

The big data field is kind of like the Wild Wild West, lacking the mathematical rigour and peer review process of more mature disciplines (like statistics for example). That will come with time.

Yeah, but with regression and SVMs you know exactly what is going on, and the strength is in the algorithm, which also has awesome theoretical justifications. With ANNs (which I love, btw), the strength is in having a very large hypothesis space and then optimizing its cost function. A bit like infinite monkeys writing Shakespeare (btw, the nvidia ANN for autonomous driving has more than 40 billion parameters, and there are probably bigger ANNs out there).

I agree with the last point, it is exactly like that: trial and error and hope for the best. Mr. Jordan is afraid that this will backfire (some decisions going horribly wrong and being very costly, or worse), which would then result in less money for AI, and so another winter. He is now working on providing exactly that, some theoretical justifications. Not very interesting from an engineering point of view, but definitely needed if we want to make serious progress.

donkeyfish

Full Member

I remembered this one when I saw the thread. Not exactly something from Nature, but was a good read for a layman like me. Just to appreciate the difference from something "hard-coded".

https://www.technologyreview.com/s/...ss-in-72-hours-plays-at-international-master/

Very interesting topic indeed, cheers for this thread guys


Pogue Mahone

Swiftie Fan Club President

Bump.

SwansonsTache

incontinent sexual deviant & German sausage lover

I reckon that self consciousness and the recognition of the "I" is somewhat linked to intelligence.

I am trying to find a program I saw on Discovery that showed the difference in perception of self between dogs and chimps. Chimps are recognized to be more intelligent than dogs, and they have a proven concept of self, while dogs have none. But can one claim that chimps have more "soul" than dogs, or just more intelligence?

http://www.janegoodall.ca/about-chimp-so-like-us.php

Chimpanzees are capable of reasoned thought, abstraction and have a concept of self. Chimps use reasoned thought when they process information and use their memory, for example when finding fruit according to what season it is. Chimps are capable of generalization and symbolic representation, as they are able to group symbols together, and some chimps have even learned how to use American Sign Language. Chimps also have a “concept of self”, which refers to an individual’s perception of their being in relation to others.

Proof of self-recognition in chimps; dogs don't possess this trait.

This is why I believe that intelligence is a product of the brain, or rather brain chemistry. A soul is merely a product of conscious thought, which all stems from the brain.

Pogue Mahone

Swiftie Fan Club President

Yeah, that's my take on it too. The more sophisticated the brain gets, the more clearly it grasps the concept of "self". We've no reason to assume there's something magic about biological circuitry when it comes to consciousness that can't be replicated in a machine.

Will Absolute

New Member

Pogue says the brain is just an information processor made of meat, but I don't think that's the whole truth.

There is something magical, for want of a better word, about the brain. It wasn't wired up from a circuit diagram. The brain evolved over half a billion years into an almost infinitely complex jumble of interconnections. It's an emergent system whose behaviour as a whole cannot be deduced from a study of its individual parts. We will never understand it.

And our lack of understanding will stymie our attempts to duplicate its functions.

Our best shot, imo, is to try to echo biological evolution itself. To create clusters of virtual 'neurons' and allow them to 'evolve' in response to the challenges of their cyber-environment. Whether the result would be anything useful though..
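As a rough illustration of that "let them evolve" idea, here is a toy sketch. Everything in it is an assumption made for the example: a single threshold "neuron" with three numbers as its genome, a made-up environment (the logical OR truth table), and hypothetical function names. It is nowhere near a brain, it only shows the mutate-and-select loop.

```python
import random

# The hypothetical "environment": the truth table for logical OR.
CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def fires(genome, inputs):
    """A single threshold 'neuron'; genome = (w1, w2, bias)."""
    w1, w2, bias = genome
    return 1 if w1 * inputs[0] + w2 * inputs[1] + bias > 0 else 0


def fitness(genome):
    """Number of cases the neuron gets right (0 to 4)."""
    return sum(fires(genome, x) == target for x, target in CASES)


def mutate(genome, rng, scale=0.5):
    """Copy a genome with small random changes to every weight."""
    return tuple(g + rng.gauss(0, scale) for g in genome)


def evolve(generations=50, pop_size=20, seed=0):
    rng = random.Random(seed)
    population = [tuple(rng.uniform(-1, 1) for _ in range(3)) for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # The fitter half survives unchanged; the rest are mutated copies of survivors.
        survivors = population[: pop_size // 2]
        children = [mutate(rng.choice(survivors), rng) for _ in range(pop_size - len(survivors))]
        population = survivors + children
    return max(population, key=fitness), history


best, history = evolve()
print(fitness(best), history[:5])
```

Nobody designs the weights here; selection pressure finds them. Whether that scales to anything useful is exactly the open question raised above.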


It is complex and we don't understand it, but I don't think there is any reason to believe that there is something magical there, or that we won't ever understand it.

AI already beats the brain at many pattern-recognition tasks and logic games. It already beats the average human at image recognition, it can recover the voice from a silent video (something the human brain can't do), and within 2-5 years it will drive cars considerably better than us. I don't know if we'll get 'hard AI' during our lifetime, but I think that eventually we'll get there.

PedroMendez

Acolyte

At the moment we don't even know how to approach the creation of hard AI. I agree that we'll get there, but it is a lot further away than many people (who are hyping this topic) suggest.

TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669


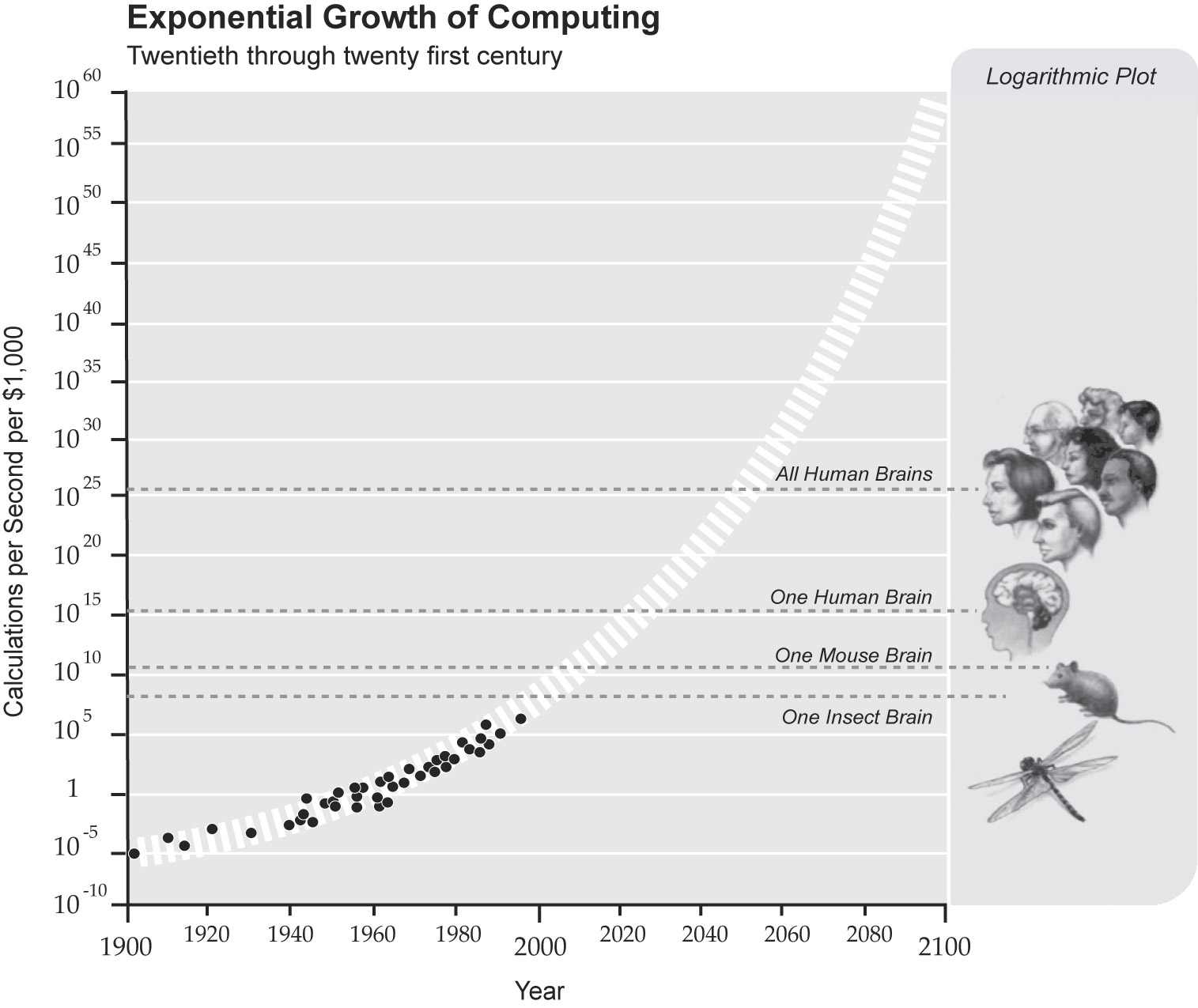

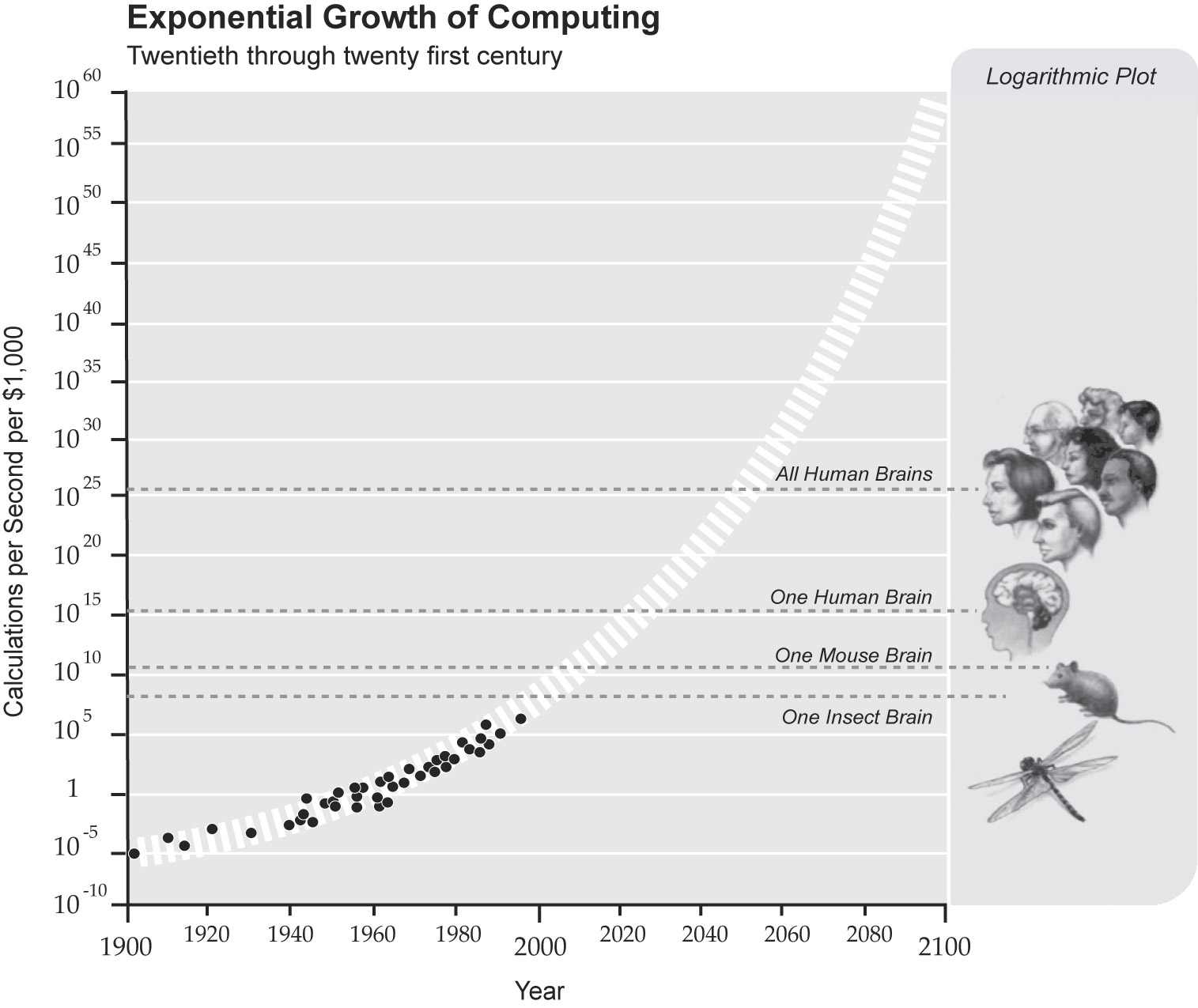

I agree, but some of it depends on whether Moore's Law holds up. Some think it will come to an end in the near future; others believe they'll just increase the volume of chipsets and find a way to keep them cool (3D chips).

The thing is, with exponential hardware increases, by 2029 we'll have a computer with the processing power of a human being, and by 2045 one with the processing power of every single human being on the planet.

Then consider that by that point we'll have many more people working in Machine Learning and Computer Science related fields, the thinking will be much more mature, and there'll be new leaps (children who are under 6 now will come through school learning Computer Science from a young age, graduate from college, and spend 6-7 years in the field working on this problem).

There was a Nobel Prize panel that spoke about ASI and the Singularity not so long ago, and it is a case of "when". Microsoft's chief basically said we're not far off 100% speech recognition, language is only a few years off, and computer vision won't take longer than a decade, so some portions of what you think of as being human are already there.

(You may think "but we haven't come that far since the computer was invented!" You're forgetting the exponential increase in processing power: it goes 2, 4, 8, 16, etc. Only recently have we exceeded the brain power of things like birds and mice.)
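To put numbers on the 2, 4, 8, 16 pattern, here is a small sketch of the doubling arithmetic. The two-year doubling period is an assumption (a commonly quoted figure for Moore's Law), and the function names are made up for the example; a different period changes the result a lot.

```python
def doublings(start_year, end_year, doubling_period_years=2.0):
    """How many times capacity doubles between two years."""
    return (end_year - start_year) / doubling_period_years


def growth_factor(start_year, end_year, doubling_period_years=2.0):
    """Total multiplication of capacity over the span."""
    return 2 ** doublings(start_year, end_year, doubling_period_years)


print([2 ** n for n in range(1, 5)])   # [2, 4, 8, 16]
print(doublings(2029, 2045))           # 8.0
print(growth_factor(2029, 2045))       # 256.0
print(growth_factor(2029, 2045, 1.0))  # 65536.0 if the doubling period were one year
```

So under the two-year assumption, 16 years gives a 256-fold increase; how far that takes you depends entirely on the doubling period you believe in.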

Throw in the fact that by 2045, if you believe Moore's Law will hold up, we'll be able to run huge simulations of the human brain, and nanotechnology might be able to construct something similar to a human brain for experimentation. We'll be at least 15 years into a nano revolution (picture the gap between now and Y2K, that's how far along it'll be, and it was just after the year 2000 that we went beyond a computer with the processing power of an insect brain).

Neuroscience will understand the brain much better thanks to computer simulation, advanced scanning tools and medical understanding. I think by the 2040s we'll have AGI, and people like Nick Bostrom, Bill Gates and Stephen Hawking believe that once AGI is here, ASI will soon follow.

For those who doubt ASI will soon follow AGI, think of this: a computer that has just exceeded any living human's intelligence can read through every single written work, including scientific papers, and apply pattern recognition, logic and reasoning to it within <10 seconds (a human can't even do that in a lifetime).

Now consider that a human being can work maybe 6-8 hours before getting tired and slowing down (much less than that once you factor in breaks, daydreaming, etc.). Then think that it'll have the hardware of every human on the planet combined; imagine this AGI functioning 24/7, running in multiple clusters and threads, with probably 100,000+ brain simulations going at the same time.

This AGI will be able to refine its own software and look for improvements, and Deep Learning has already demonstrated learning from mistakes (and think how much better the algorithms for this will be by the 2040s). ASI won't be long after; we're talking years, not decades.

I really believe we'll have it by the 2060s at the latest. I know some here believe we won't, but we're talking about roughly the same span as between now and the 1980s, when the very first PCs and Walkmans were available. The acceleration is due to computational power more than anything.

For those on this thread who are young, take good care of yourselves because the 2040s-2060s are going to be mind-blowing compared to now.


dustfingers

Full Member

- Joined

- Aug 23, 2014

- Messages

- 492

- Supports

- MMC

He's up there as one of the greatest minds of the current era, probably outside of physics. I agree with him about neural networks these days, though. The human brain is unsupervised, or at least very weakly supervised, while ANNs are all about having millions of labeled samples, which probably isn't feasible for solving practical sparse-data problems.

What have you been working on lately? Deep learning or Bayesian? I have been doing fully unsupervised learning for the last few months and it's been frustrating. But in some ways it has been making me aware of the flaws of current neural networks. There was an article on rethinking unsupervised learning and I am kind of leaning towards that these days.

But I hope for the field's sake that Michael Jordan doesn't turn into Vapnik. Collaborative research is the most important thing for progress in any field.


dustfingers

Full Member

- Joined

- Aug 23, 2014

- Messages

- 492

- Supports

- MMC

I don't think it's going to be all doom and gloom by the 2060s. AI will probably have a role to play in shaping future job markets via self-driving cars and other innovative ideas, but that is no different from when machines started replacing human labor after the industrial era.

I don't do neuroscience research, but based on what I have heard from experts, it's highly unlikely we could reach a full understanding of the brain within the next twenty or thirty years. Even consciousness is super hard to explain, and it will probably take decades just to understand that. Without a good theory of how the brain works or how intelligence is learned and acquired, no amount of machine resources is going to help in creating a truly intelligent system.

dustfingers

Full Member

- Joined

- Aug 23, 2014

- Messages

- 492

- Supports

- MMC

The main thing is probably how many examples a brain needs versus how many examples a computer needs to become really good at recognizing things and listening properly. The supervised learning problem is modeled as a simple curve-fitting approach that minimizes the classification error, which is a lot easier and more controlled than learning what the classification task is and what the features are. It would also be interesting to see how joint learning models perform compared with how humans do it. And creativity is too far from even being understood for us to see a machine replicate it.
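A minimal sketch of what "fit a boundary to labelled examples by reducing classification errors" means, using a classic perceptron. The six data points, the labels and the function names are all made up for illustration; the point is that both the task and the features are handed to the learner in advance.

```python
def predict(weights, bias, point):
    """Classify a 2D point as 1 or 0 using a linear boundary."""
    score = weights[0] * point[0] + weights[1] * point[1] + bias
    return 1 if score > 0 else 0


def train_perceptron(data, epochs=100):
    """Nudge the boundary every time a labelled example is misclassified."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for point, label in data:
            error = label - predict(weights, bias, point)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights[0] += error * point[0]
                weights[1] += error * point[1]
                bias += error
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, bias


# Hand-labelled toy examples (assumed, linearly separable).
data = [((2, 2), 1), ((3, 1), 1), ((2, 3), 1),
        ((0, 0), 0), ((1, 0), 0), ((0, 1), 0)]
weights, bias = train_perceptron(data)
errors = sum(predict(weights, bias, p) != y for p, y in data)
print(weights, bias, errors)
```

Every example arrives with its answer attached, which is the controlled setting described above. Working out what the classes or features should be in the first place is not something this loop can do.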


TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669


It's really hard to say yes or no to what'll happen, but the computational power will be there to do anything. It'll have huge applications in medicine and every other walk of life. Even if there's no AGI there'll be some very good applications; ANI will be beyond any human ability.

Computers are great at logic, but we tend to dismiss the huge progress that's been made in recent years. As the years pass there'll be even more data to mine too, which helps.

I firmly believe it'll be this century, and I think no later than 2060-70, but then again it's all guesswork as nobody knows. We don't know what theories will be put forward, when someone will have a Eureka moment, where the funding will be, or whether the right people will go to the right schools and get the right jobs.

I don't see AGI as doom and gloom though, as long as there are good procedures in place and the logistics are figured out.

Yes, full understanding of the brain is a huge field that we still don't understand to this day, but once diseases like cancer are cured, isn't the last big mystery for humanity AI and how the brain truly functions? Won't there be a lot more people researching those subjects?

Google seems to be buying up a lot of AI-related companies and looking for as many graduates as they can get in those fields too. Over 20+ years there's a lot of progress that'll happen. We might not be at the levels forecast, but I really see it happening in our lifetimes.


dustfingers

Full Member

- Joined

- Aug 23, 2014

- Messages

- 492

- Supports

- MMC

I think it would be truly fulfilling when we reach at an point that we truly understand human brain and we are actually able to replicate that into some form of chemicals system so that human consciousness or sense of being oneself can be transferred from brain to other mechanical parts. This wouls probably mean no disease and also attainment of immortality but probably useless human beings too. That would probably be the future someday.It's really hard to say yes or no to what'll happen, the computational power will be there to do anything. It'll have huge applications in Medicine and every other walk of life. Even if there wasn't AGI there'll be some very good applications, ANI will be beyond any human ability.

I firmly believe it'll be this century, I think it'll be no later than 2060-70 but then again it's all guess work as nobody knows, we don't know what theories will be put down when someone will have Eureka moments. Where the funding will be, that the right people go to the right schools and get the right jobs.

I don't see AGI as doom and gloom though, as-long as there's good procedures in place and the logistics are figured out.

Yes full understanding of the brain is a huge field that we don't understand still to this day, but once diseases like cancers are cured, isn't the last big mystery for humanity AI and how the brain truly functions? won't there be a lot more people researching those subjects?

Another would be using some form of flash memory to learn a new subject: insert a flash card into the human brain and we are experts in quantum computing all of a sudden. That is why the secret of unsupervised learning is so important to find out.

I don't think it is going to be doom at any time though, because everything is going to be an evolution rather than a revolution.

TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669

I think it would be truly fulfilling when we reach a point where we truly understand the human brain and are actually able to replicate it in some form of chemical system, so that human consciousness, or the sense of being oneself, can be transferred from the brain to other mechanical parts. This would probably mean no disease and the attainment of immortality, but probably useless human beings too. That would probably be the future someday.

Another would be using some form of flash memory to learn a new subject: insert a flash card into the human brain and we are experts in quantum computing all of a sudden. That is why the secret of unsupervised learning is so important to find out.

I don't think it is going to be doom at any time though, because everything is going to be an evolution rather than a revolution.

Just to reply to you RE: yesterday, and the whole 2040s-2060s prediction.

Once a computer has the processing power of every human on the planet combined (and the year after that brings yet another exponential increase), do you not think it only takes the right idea or algorithm for there to be an explosion at that point?

We're talking within a day or a week: with the right idea it could really do some serious learning and development. We have to think about the power available at that point too.

I'm talking about intelligence here, not motor functions or dexterity, which I think will be done in the 2030s. I'm talking about true intelligence and learning.

Right now we see limitations because we're only at around one mouse brain, so it's really ANI.

I just see that as AI gets better in the next 10 years, more graduates will switch from web and software engineering to pure AI, and universities will start to run courses solely in AI too. That again is another leap.


Will Absolute

New Member

Right now we see limitations because we're only at around one mouse brain, so it's really ANI.

I don't know much about this issue except in philosophical terms, so I'm wondering what's meant by this. Are you saying there's a machine out there that, provided all components could be appropriately miniaturized, can perform all the functions of a mouse? Would be indistinguishable in its behaviour from an advanced biological organism? Or do you mean it has the processing capacity of a mouse brain?

It seems to me that this distinction is not properly respected by advocates of AI. By analogy, it's like assembling all the chemical constituents of a human body, dumping them on the floor, and saying: 'There, that's a human being.' But the trick is in the interconnection of the component parts.

Brute processing capacity is clearly not the key to brain function. And it doesn't work by algorithms. The idea that stumbling on the right algorithm will magically transform a mass of dead circuitry into an intelligent entity is no more than an act of faith. And probably a misplaced one.

As far as we know the only thing capable of intelligent thought in the Universe is the biological brain. AI seems to be largely an attempt to arrive at the destination of intelligent functioning by taking a different route. But there may be no other route.

To me it seems likely that the architecture of the brain is inseparable from its function. Intelligence is what a brain does. The two cannot be separated. It's a unique one-to-one mapping.

TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669

I don't know much about this issue except in philosophical terms, so I'm wondering what's meant by this. Are you saying there's a machine out there that, provided all components could be appropriately miniaturized, can perform all the functions of a mouse? Would be indistinguishable in its behaviour from an advanced biological organism? Or do you mean it has the processing capacity of a mouse brain?

It seems to me that this distinction is not properly respected by advocates of AI. By analogy, it's like assembling all the chemical constituents of a human body, dumping them on the floor, and saying: 'There, that's a human being.' But the trick is in the interconnection of the component parts.

Brute processing capacity is clearly not the key to brain function. And it doesn't work by algorithms. The idea that stumbling on the right algorithm will magically transform a mass of dead circuitry into an intelligent entity is no more than an act of faith. And probably a misplaced one.

As far as we know the only thing capable of intelligent thought in the Universe is the biological brain. AI seems to be largely an attempt to arrive at the destination of intelligent functioning by taking a different route. But there may be no other route.

To me it seems likely that the architecture of the brain is inseparable from its function. Intelligence is what a brain does. The two cannot be separated. It's a unique one-to-one mapping.

The idea put forward, though, is that once the right idea arrives it won't take long to become a reality; computers learn much faster than humans do.

Think about humans learning any task: we learn by repeating the same task over and over again until we understand it, and even then we forget it after a number of years. Do computers? Probably not.

The brain is made up of links:

A scientist here explains how a neuron in your brain might store a picture of Nicole Aniston, with links associated with it: when you hear the name "Nicole Aniston" spoken, your brain brings up the image and associates the sound with it. The psychological term "conditioning" explains this a little too.

That's why learning languages is kind of difficult and takes a lot of time: you need to link the word "apple" with the German word for apple, along with thousands of other words, and then your brain needs to figure out how not to translate things literally.

The point of the above is that the human brain needs to build up these links, whereas a computer can just read through vast amounts of information. But until we have the technology and the understanding, I don't believe we'll get there; I think we're a minimum of 20 years away from a sophisticated AI near human intelligence.

The thing with any computer intelligence is that once the right approach is found, it won't take 30 years to put it through school and college; it'll take days or weeks.

We're still decades off that point, but we'll at least have computers with 100% voice recognition, 100% computer vision and 100% language capability by the mid 2020s at the latest.

I don't feel brute force is the right option either. To be quite honest with you, I don't think a super-intelligent AI will be as smart as human beings; in my opinion it won't be conscious (not for quite a while).

As people say, we don't understand enough of the brain. If we did, we could in theory create our own animals, different creatures, and limit their behaviours. This isn't limited to ASI.

Have you ever come across the Chinese Room thought experiment?

My point is merely the following in terms of processing power: the more processing power we have available, the quicker a computer can learn. That's why I say that right now, with something similar to a mouse brain in processing power, we can expect it to play simple games and process language from the cloud, but in my opinion it doesn't have the hardware available to do vast amounts of data crunching.

Maybe I'm wrong, but I think the reason the prediction is so far off is also to do with hardware, and computer hardware, by the way, has a knock-on effect on every other scientific field.

Coming back to the Chinese Room above, the question for me is: will it truly understand?


proof of self recognization in chimps, dogs doesn't possess this trait.

They proved last year (IIRC) that dogs do possess that trait, it's just harder to spot.

The concern for me isn't an AI that has any deliberate intent to cause harm but just dangerous, unpredicted emergent behaviour. Working with even the very simple AI used in video games, you see this all the time. Very simple AI routines bump against each other and produce totally unpredicted results. The more complex AI routines get, the harder it'll be to predict what possible outcomes you might see and to mitigate them.

TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669

The concern for me isn't an AI that has any deliberate intent to cause harm but just dangerous, unpredicted emergent behaviour. Working with even the very simple AI used in video games, you see this all the time. Very simple AI routines bump against each other and produce totally unpredicted results. The more complex AI routines get, the harder it'll be to predict what possible outcomes you might see and to mitigate them.

Game AI isn't the most sophisticated AI out there though, plus it's probably poorly optimised. If you want to see good route-finding AI, look at driverless cars (linked below, a very good watch by the way).

Anyway, I think even if we don't have true AI in the next 20 years, we'll have a planet rapidly changed by AI (I still believe we'll be close; I gave my reasons earlier in this thread).

Moving away from your point though, Kent, here's some food for thought for people in this thread.

One of the biggest projects I'm excited by is that of Craig Venter, who helped unravel the human genome in 2001.

He founded Human Longevity Inc in the last few years, and he and his team are sequencing a human genome every 150 seconds or so. They are hoping to do 5 million in 5 years; currently they have the capacity to do 100,000 in one year.
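As a rough sanity check on those figures, the throughput arithmetic can be sketched in a few lines of Python. This assumes sequencing runs non-stop for a 365-day year, which is a simplification of mine and not something stated above, and the function names are made up for illustration.

```python
# Back-of-the-envelope throughput check.
# Assumption (not from the post): sequencing runs 24/7 for a 365-day year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def genomes_per_year(seconds_per_genome):
    """Yearly output if one genome is finished every `seconds_per_genome` seconds."""
    return SECONDS_PER_YEAR / seconds_per_genome


def seconds_per_genome(yearly_output):
    """Average interval between genomes needed to reach `yearly_output` per year."""
    return SECONDS_PER_YEAR / yearly_output


if __name__ == "__main__":
    # One genome every 150 seconds, as quoted.
    print(f"150 s per genome  -> {genomes_per_year(150):,.0f} genomes/year")
    # Quoted capacity of 100,000 genomes in one year.
    print(f"100,000 per year  -> one every {seconds_per_genome(100_000):.1f} s")
    # Target of 5 million in 5 years, i.e. 1 million per year on average.
    print(f"1,000,000 per year -> one every {seconds_per_genome(5_000_000 / 5):.1f} s")
```

On those assumptions, one genome every 150 seconds comes to roughly 210,000 a year, about double the quoted 100,000 capacity, while 5 million in 5 years would need one about every 32 seconds on average. So the three numbers probably describe different things (a peak rate, a realistic yearly capacity and a target that needs more machines), which I'm guessing at rather than quoting.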

The reason this is great is that they have hired the creator of Google Translate, who worked on that for nearly a decade, to basically work with the genome and analyse it. This could mean that in the future you are a blood sample away from a computer being able to say that the sequence "X Y Z B L" corresponds to pancreatic cancer! You could just detect it without going into a doctor's surgery and describing your symptoms.

Health care is going to change massively, and again we can thank big data and machine learning for that. Google is also doing a similar thing with Calico, which is looking at analysing health records, but we know very little about Calico; it's a very private company.

I'm more excited about the health care benefits than I am about the Singularity.

Certainly exciting times ahead, and it is partly thanks to AI and computational power that we can do all this without needing lots of humans to sequence everything individually. The more data, the more accurate the results.

There's a part in the latter stages of this video too that shows a computer sorting millions of images by learning what something looks like. I'm fascinated by machine learning, but I wonder what other techniques will be available in the not-so-distant future.

https://www.ted.com/talks/jeremy_ho...ying_implications_of_computers_that_can_learn

I just love the automation side, but again, as we've said in this thread, it's pattern detection at this stage and not intelligence.

Game AI isn't the most sophisticated AI out there though, plus they're probably poorly optimised, if you want to see good route finding AI look at driver-less cars. (linked below very good watch by the way).

That's kind of my point though: they're usually extremely unsophisticated, yet even then foreseeing how relatively simple routines will interact in any given situation is extremely challenging. The more complex and interconnected systems become, the more I struggle to see how it could even be possible to foresee every potential negative outcome. In a video game that doesn't really matter, but in systems that could be responsible for, say, infrastructure, the dangers are unimaginable.
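To make the "simple routines bumping into each other" point concrete, here is a toy sketch in Python. It is not taken from any real game; the corridor, the two agents and the single "if blocked, switch lane" rule are all invented for illustration. Each agent's rule is perfectly sensible on its own, but when both apply it at the same moment they keep mirroring each other and never get past.

```python
def blocked(me, other):
    """True if the other agent is in the square directly ahead, in the same lane."""
    return me["lane"] == other["lane"] and me["x"] + me["dx"] == other["x"]


def simulate(steps=6):
    """Two agents walk towards each other along a corridor with two lanes.

    Rule for each agent: step forward if the way is clear, otherwise switch lane.
    Both agents decide from the same snapshot of the world (simultaneous update).
    """
    a = {"x": 0, "lane": 0, "dx": 1}   # walks right
    b = {"x": 3, "lane": 0, "dx": -1}  # walks left
    history = []
    for _ in range(steps):
        # Decide first, then act, so neither agent sees the other's new move.
        decisions = [(a, blocked(a, b)), (b, blocked(b, a))]
        for agent, is_blocked in decisions:
            if is_blocked:
                agent["lane"] = 1 - agent["lane"]  # sidestep into the other lane
            else:
                agent["x"] += agent["dx"]          # step forward
        history.append((a["x"], a["lane"], b["x"], b["lane"]))
    return history


if __name__ == "__main__":
    for step, state in enumerate(simulate(), start=1):
        print(step, state)
```

After the first step the two agents sit face to face and then just swap lanes in unison forever, which neither rule "intends". It's a trivial case, but it's the same kind of surprise, and with hundreds of interacting routines you can't trace it by hand like this.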

TheNewEra

Knows Kroos' mentality

- Joined

- Jan 20, 2014

- Messages

- 8,669

That's kind of my point though, that they're usually extremely unsophisticated yet even then the challenge of foreseeing how relatively simple routines will interconnect in any given situation is extremely challenging. The more complex and interconnected systems become, I struggle to see how it could even be possible to foresee any potential negative outcome. In a video game that doesn't really matter, but in systems that could be responsible for say infrastructure, the dangers are unimaginable.

Game developers don't really care about AI though.

Most games are multiplayer so it's not really in their budget; they'd much rather have nice level design, environments and visuals than good AI.

Game developers are mainly looking at fun. AI doesn't really factor in.

- Joined

- Oct 22, 2010

- Messages

- 22,679

The reason this is great is they have hired the creator of Google Translate who has worked on that for nearly a decade to basically work with the genome and analyse it, this could mean you are a blood sample in the future away from a computer being able to go the sequence "X Y Z B L" corresponds to pancreatic cancer! you can just detect it without going into a doctors surgery and saying your symptoms.

I'm not a fan of a heavily data-driven genomics approach for all diseases, especially if they are ignoring epigenetics altogether in their sequencing (and at those speeds they must be). At the end of the day each polymorphism you find significantly different in cancer patients vs population will mean a higher cancer probability by some fraction of a percent. At least that's what I've seen after attending many talks by these genomics guys.

Integrating a genomics+epigenomics result with a mechanism (why this gene, why this mutation) is IMO better.

But then I don't work on diseases, and I'm really ignorant about a lot of the newer data methods, so I could be wrong.
